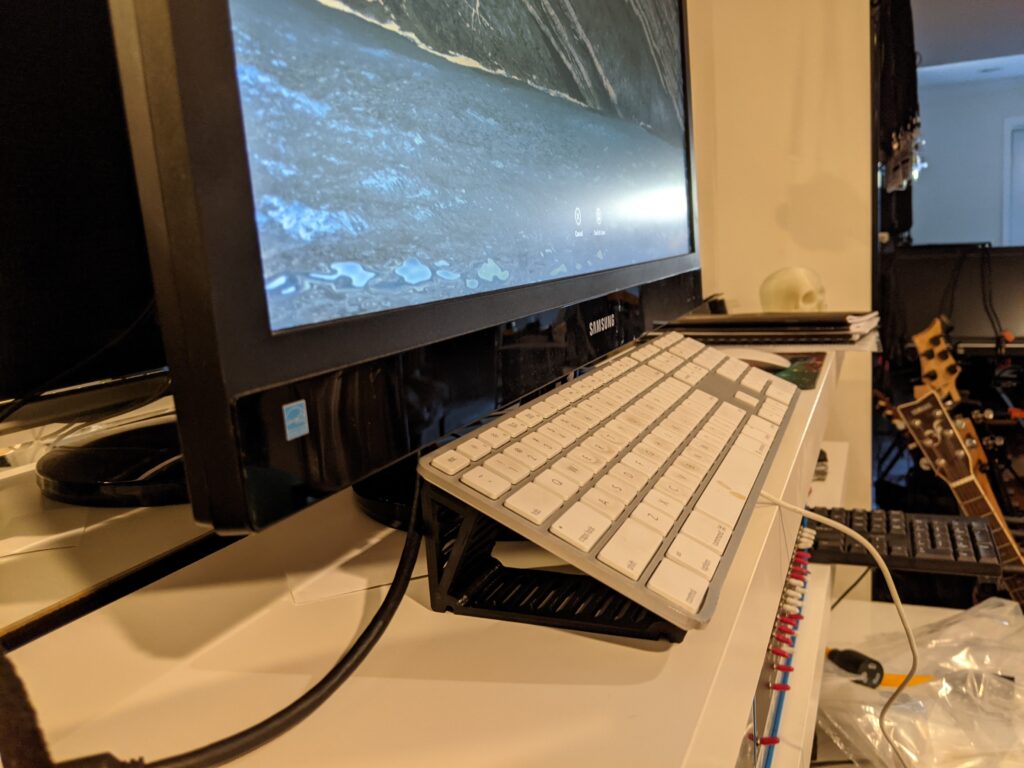

I’ve been using Octopi with my 3D printers for almost as long as I’ve been printing. The whole concept of printing from SD cards just seems alien to me, when Octopi/Octoprint jumps through all the hoops for you. I mean ALL the hoops. Upload your gcode to a web interface, set the print, watch the print, manage temperatures… there is even a spaghetti detection plugin!

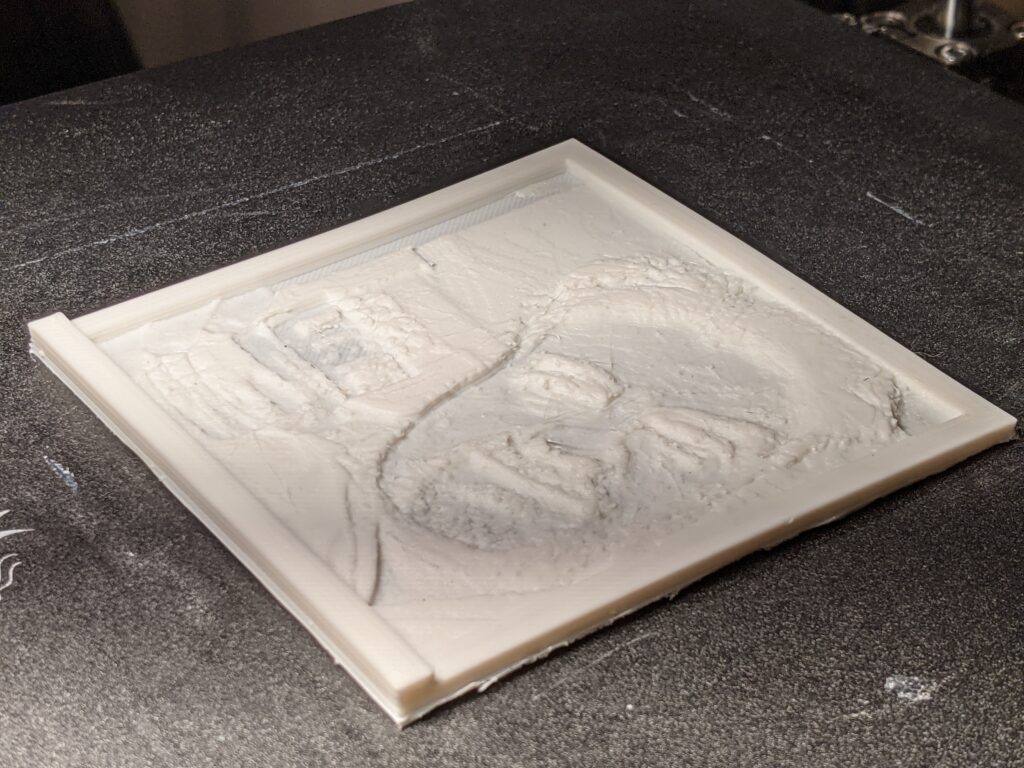

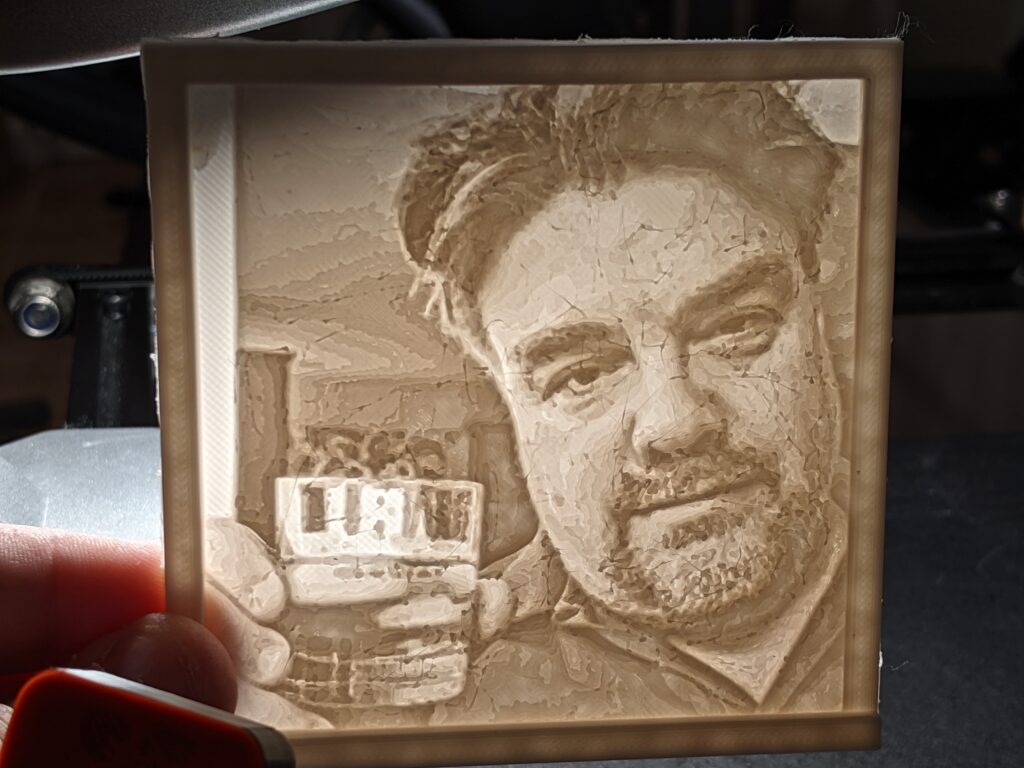

One of the biggest benefits is camera integration. Why? To monitor progress, to create stunning time-lapses. The technology has advanced so much that the Octolapse plug-in can detect when the Z-layer changes, move the extruder to an out-of-the-way corner, and take a snapshot of the current state of your print, then continue printing as if nothing happened. This results in beautiful yet creepy time-lapses where the object simply appears to grow out of thin air.

The typical thing to do is integrate directly with the Pi camera. It is perfectly utilitarian, and does the job. I haven’t been super happy with the Pi camera, however. Until recently, I had cobbled together systems to hold a camera in place using helping hands, or whatever other makeshift device I had on hand. Then I 3D-printed a mount to do the same thing. But the quality’s just not there. The resolution is inferior, it doesn’t handle low-light well, and it can’t pan, tilt or zoom, so you’re stuck with manual adjustments.

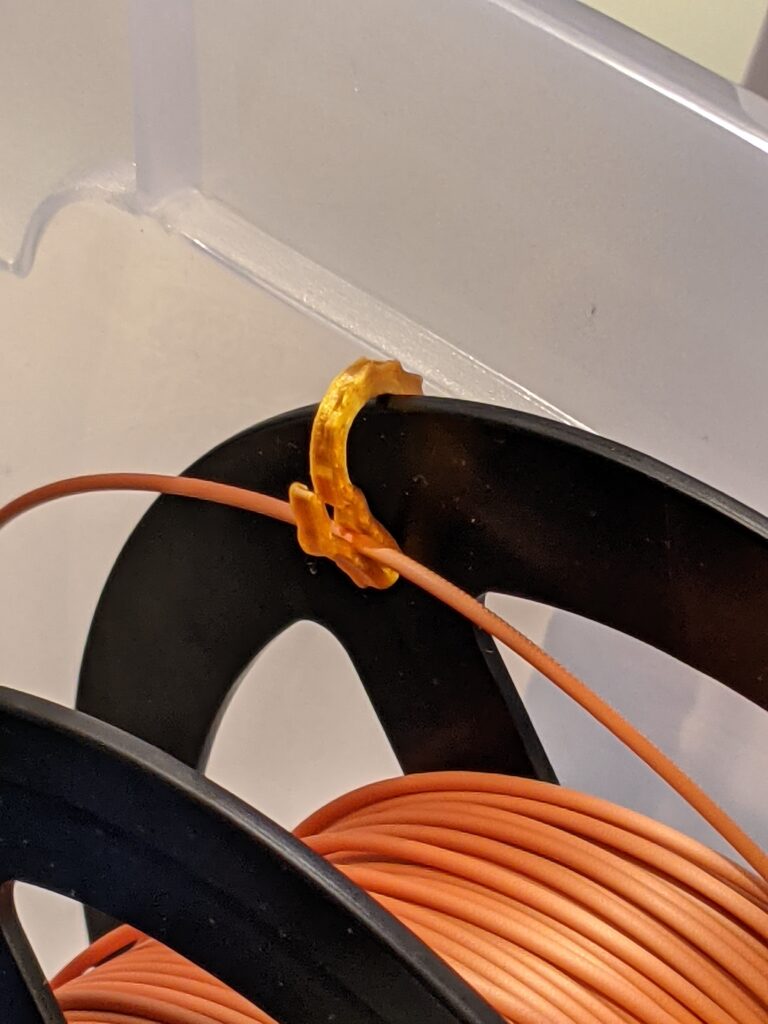

Then I saw that someone had created a frame mount for a Wyze Cam-Pan for the Ender 5. The Cam-Pan can be found for under $30, and has full HD and PTZ capabilities. It also records and plays audio, not that I’d need that here. So I printed one, and ordered one, before researching how to integrate it.

Well, by default, the Cam-Pan wants to work like a Ring camera and send its output to the cloud. SaaS is king, apparently. But wait. Wyze offers RTSP firmware for it. That makes it simple, right? Well, not so fast. It makes a decent stream, but it doesn’t seem that Wyze’s RTSP firmware offers the still-image function that the Octolapse plug-in requires.

Another option Wyze offers is USB Webcam firmware. But that requires a clunky additional wired connection, a USB-A to USB-A cable, from the Pi to the Wyze camera. HATE IT.

I started talking with Kevin about reverse-engineering the Wyze firmware to see if there was hidden functionality, but then I remembered that everything has already been done. So I googled Wyze camera reverse-engineering. First I found some very confusing custom firmware made for the Wyze V1 and V2 cameras. This was getting closer, but I was specifically looking for the Cam-Pan version. I started reading the “issues” section of the GitHub repo for that release, which hadn’t been updated in three years. People there were wondering why it exists at all, since at its core it’s a no-further-benefit fork of the Xiaomi Dafang firmware for Wyze. The Dafang firmware is better documented, more thorough, specifically known to support the Cam-Pan, and was updated just four months ago.

Fast forward a couple hours, and I did it:

- I flashed the custom bootloader. It’s smart: if it detects the SD card with the custom software on it, it runs that. Otherwise it runs the built-in Wyze version. BRILLIANT.

- I created the custom SD card, editing wpa_supplicant.conf to connect to my wifi.
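For anyone who hasn’t edited one before, the wifi part of wpa_supplicant.conf is just a small `network={...}` block. Here is a minimal Python sketch that renders one for a plain WPA2 passphrase network. The function name and the example SSID are made up for illustration, and the template that ships with the custom firmware may contain additional fields, so treat this as a rough guide and not the firmware’s exact file.

```python
def wpa_network_block(ssid: str, psk: str) -> str:
    """Render a minimal WPA-PSK network block for wpa_supplicant.conf.

    Hypothetical helper for illustration; the firmware's own template
    may include more settings than shown here.
    """
    # WPA passphrases must be 8 to 63 characters long.
    if not 8 <= len(psk) <= 63:
        raise ValueError("passphrase must be 8 to 63 characters")

    def quote(value: str) -> str:
        # Keep the sketch simple: refuse values containing a double quote
        # or newline instead of guessing at escaping rules.
        if '"' in value or "\n" in value:
            raise ValueError("value contains a quote or newline")
        return f'"{value}"'

    return "\n".join([
        "network={",
        f"    ssid={quote(ssid)}",
        f"    psk={quote(psk)}",
        "    key_mgmt=WPA-PSK",
        "}",
        "",
    ])


if __name__ == "__main__":
    # Example values only; substitute your own network name and passphrase.
    print(wpa_network_block("HomeNet", "correct horse battery"))
```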

I booted it up, and was dismayed. It clearly “works,” in that ssh, web and rtsp ports are open by default, but this was clearly written before the great clampdown on TLS. A self-signed cert with an untrusted root cert, whose name will never match on the common name. I spent at least an hour going down that rabbit hole and trying to bypass it, but here’s the thing. Not only does it have to work on my browser, but it also has to work from the Octoprint installation on the Pi. Since I now have SSH access to the Wyze camera via its new firmware, I logged in to see if it would be easier to just “replace the certs.”

Sure enough, it all hinges on just a cacert and a lighttpd cert. I figured I had little to lose at this point, so I generated a new cert for it, signed by my infrastructure (what, doesn’t everyone run FreeIPA in their basement in 2021?), and dropped these new and authenticated certs into place. I power-cycled the camera. IT CAME BACK UP. And now, at least it ALLOWS me to bypass SSL/TLS errors (apparently my FreeIPA server isn’t too smart about daylight saving time, so I still have twenty minutes before that cert is valid). (This was incorrect, as I discovered today. My infrastructure was using an NTP server that had been removed from my network, and its clock has been WRONG for some time now. I fixed that today!)
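The “not valid yet” symptom is a quick thing to sanity-check. A minimal sketch, assuming you have the cert’s notBefore string in the format Python’s `ssl` module reports it (for example from `getpeercert()`): compare it against the clock of the machine doing the checking. The function name is hypothetical.

```python
import ssl
import time


def seconds_until_valid(not_before: str, now: float) -> float:
    """Seconds until the cert's notBefore time; zero or negative means valid.

    `not_before` uses the "Jan  1 00:00:00 2021 GMT" style that the ssl
    module uses for certificate dates. `now` is a Unix timestamp.
    """
    return ssl.cert_time_to_seconds(not_before) - now


if __name__ == "__main__":
    # Example date only. A positive result means the issuing server's clock
    # is ahead of this machine's clock, or this machine's clock is behind.
    wait = seconds_until_valid("Jan  1 00:00:00 2021 GMT", time.time())
    print(f"cert becomes valid in {wait:.0f} seconds")
```

If the number is positive right after issuing a cert, a clock somewhere is wrong, which is worth checking before blaming time zones.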

The point of the whole TLS exercise is so that I can add the camera to Octolapse, which actually does a verification check to ensure it can read both the stream and the “capture current pic” snapshot link. I suppose I also could have figured out how to turn off https entirely on lighttpd, but I was already nearly done when I thought of that option. Also, it’s not a full linux deployment on that firmware. It’s a busybox/mips stripped down linux with all the configs living on the SD card.
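I don’t know exactly what Octolapse’s verification does internally, but the idea of “is this snapshot link really returning a picture” can be sketched in a few lines of Python. This is my own rough approximation, not Octolapse’s code, and the URL at the bottom is a placeholder, not the firmware’s real snapshot path.

```python
import urllib.request


def looks_like_jpeg(data: bytes) -> bool:
    """True if the bytes begin with the JPEG start-of-image signature."""
    return data[:3] == b"\xff\xd8\xff"


def check_snapshot(url: str, timeout: float = 5.0) -> bool:
    """Fetch a snapshot URL and report whether it appears to return a JPEG.

    Note: with a self-signed or not-yet-valid cert, an https URL will raise
    an SSL error here, which is the same wall described above.
    """
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status == 200 and looks_like_jpeg(resp.read(16))


if __name__ == "__main__":
    # Placeholder address and path; substitute your camera's snapshot URL.
    print(check_snapshot("http://192.0.2.10/snapshot.jpg"))
```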

I ended up disabling SSL support anyway, because why not?

The next problem: it looks like Octoprint/Octolapse, between the two of them, need the feed in lots of different ways. The main “control” screen in Octoprint needs it in http/mjpeg; no other feed type will work here and show the current moving image. There are -three- settings within Octolapse: base address, stream and snapshot. And I’m pretty sure the stream option here would take an rtsp stream. The snapshot, however, is ghoulish, in that it needs an actual snapshot function. Why not just build in a function that takes a current snapshot from the stream? Oh well, I don’t know the capabilities that well, so there must have been a good reason for that choice.
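To show what “take a snapshot from the stream” could look like, here is a minimal sketch for the mjpeg case only (it does not apply to rtsp, which needs a real decoder). An mjpeg stream is a sequence of complete JPEG images, so one common trick is to scan the buffered bytes for the JPEG start and end markers. The function name is mine, and this naive scan can be fooled by JPEGs with embedded thumbnails, so it is an illustration and not a robust parser.

```python
from typing import Optional

JPEG_START = b"\xff\xd8"  # start-of-image marker
JPEG_END = b"\xff\xd9"    # end-of-image marker


def first_jpeg_frame(buffer: bytes) -> Optional[bytes]:
    """Return the first complete JPEG found in a chunk of mjpeg stream bytes.

    Returns None if the buffer does not yet hold a whole frame.
    """
    start = buffer.find(JPEG_START)
    if start == -1:
        return None
    end = buffer.find(JPEG_END, start + len(JPEG_START))
    if end == -1:
        return None
    return buffer[start:end + len(JPEG_END)]


if __name__ == "__main__":
    # Fake stream data: multipart headers, then a tiny stand-in "frame".
    chunk = b"--frame\r\nContent-Type: image/jpeg\r\n\r\n\xff\xd8DATA\xff\xd9\r\n"
    print(first_jpeg_frame(chunk))
```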

Meanwhile, the camera won’t do http mjpeg with any of the firmware I’ve tested so far. The workaround seems to be going back to my original idea: use ffmpeg and ffserver on the Pi itself to suck in the rtsp stream and serve it on a different port locally as mjpeg. Again, it seems like an awful lot of load to do something so seemingly simple. But packet-wise, everything stays local, and Octopi would be sucking that feed in anyway. BTW, ffserver requires a specific older version of ffmpeg and is a 2-4 hour compile on the Pi.
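For context on what “serving as mjpeg” means on the wire: http/mjpeg is just an HTTP response with a `multipart/x-mixed-replace` content type, where each part is one JPEG. The sketch below shows only that framing, not the ffmpeg/ffserver setup itself. The function names and the boundary string `frame` are arbitrary choices of mine.

```python
def mjpeg_content_type(boundary: str = "frame") -> str:
    """Content-Type header value for an mjpeg-over-http response."""
    return f"multipart/x-mixed-replace; boundary={boundary}"


def mjpeg_part(frame: bytes, boundary: str = "frame") -> bytes:
    """Wrap one JPEG image as a single part of the multipart stream."""
    header = (
        f"--{boundary}\r\n"
        "Content-Type: image/jpeg\r\n"
        f"Content-Length: {len(frame)}\r\n"
        "\r\n"
    ).encode("ascii")
    return header + frame + b"\r\n"


if __name__ == "__main__":
    # Stand-in bytes instead of a real JPEG, just to show the framing.
    print(mjpeg_content_type())
    print(mjpeg_part(b"\xff\xd8DATA\xff\xd9"))
```

A server keeps the connection open and writes one such part per frame, which is why a browser can show it as a moving image with no video decoder involved.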

After managing to get it “working” with the mjpg custom firmware, I wasn’t happy with the result. The network overhead of the Pi streaming the video from the camera and then re-streaming it internally was too much, and the image ended up being glitchy and problematic. I also think having ffmpeg and ffserver was adding a notable load to the Pi.

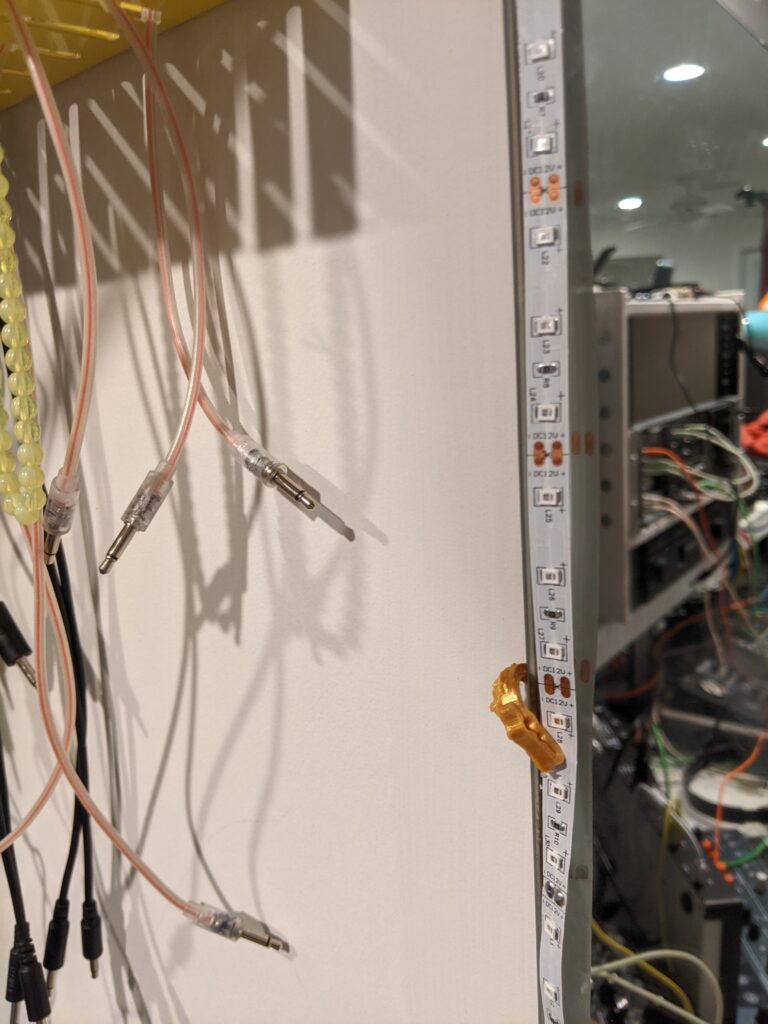

So I broke down and bought the USB-A to USB-A cable and flashed the Wyze camera with the Wyze USB-Cam version of the software. Then I ran raspi-config to turn off Pi Camera support. After setting Octoprint back to the Webcam profile and rebooting it, it just picked it up naturally. I did install the uvc software so that I could tweak the settings, but I’m not 100% sure that was necessary for the camera to work. In any case, I have great video quality and can now tweak some of the exposure and white balance settings on the camera for more dramatic timelapses.

I guess that’s a wrap. Check out the DC540 YouTube channel for future timelapse videos.