If you’ve been following along, we had a working CTF server on a beefy retired desktop server. It was brought to the July meeting in hopes of its debut, but a dead CMOS battery thwarted us.

Since I never like to do anything halfway, I got to thinking about what it would take to make that system more portable. This series of posts will document the process of bringing this to fruition.

Hardware

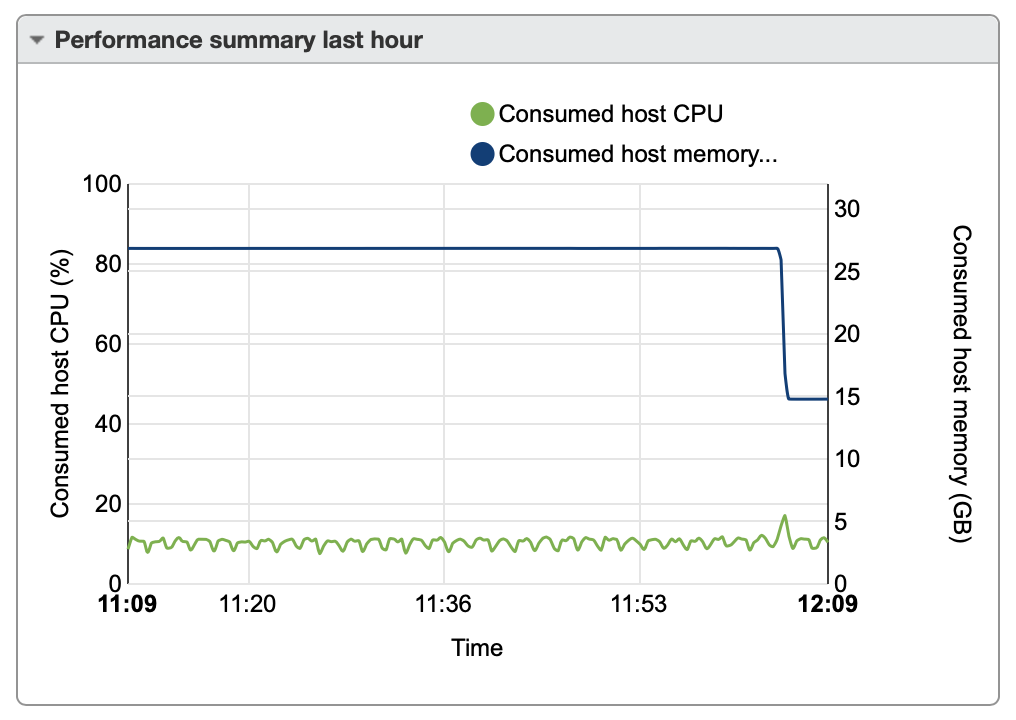

The old system was an i7-920 (4 cores, 8 threads, 2.66GHz). It was about nine years old, hence the dead CMOS battery. It was loaded with 16GB of RAM and a 500GB HDD. Skimpy by today’s server standards, but plenty of horsepower to run a dozen vulnerable VMs and the infrastructure needed to support CTFs.

I set out to get the most possible bang for my buck, with the primary factor being portability. I looked at SFF desktops and USFF desktops, and then someone I work with suggested a NUC. I started looking at specs, and realized that the latest NUC, the Hades Canyon, could FAR exceed the capabilities of that giant desktop, while fitting into a messenger bag. I started a GoFundMe to help make the purchase, since this is for the group, not for personal use, and several members donated. Not being someone who is big on patience, I jumped the gun and made a purchase, but I’m leaving the GoFundMe up. Hopefully as members and meeting attendees see the benefit of this platform, more of them will step up and offset the costs.

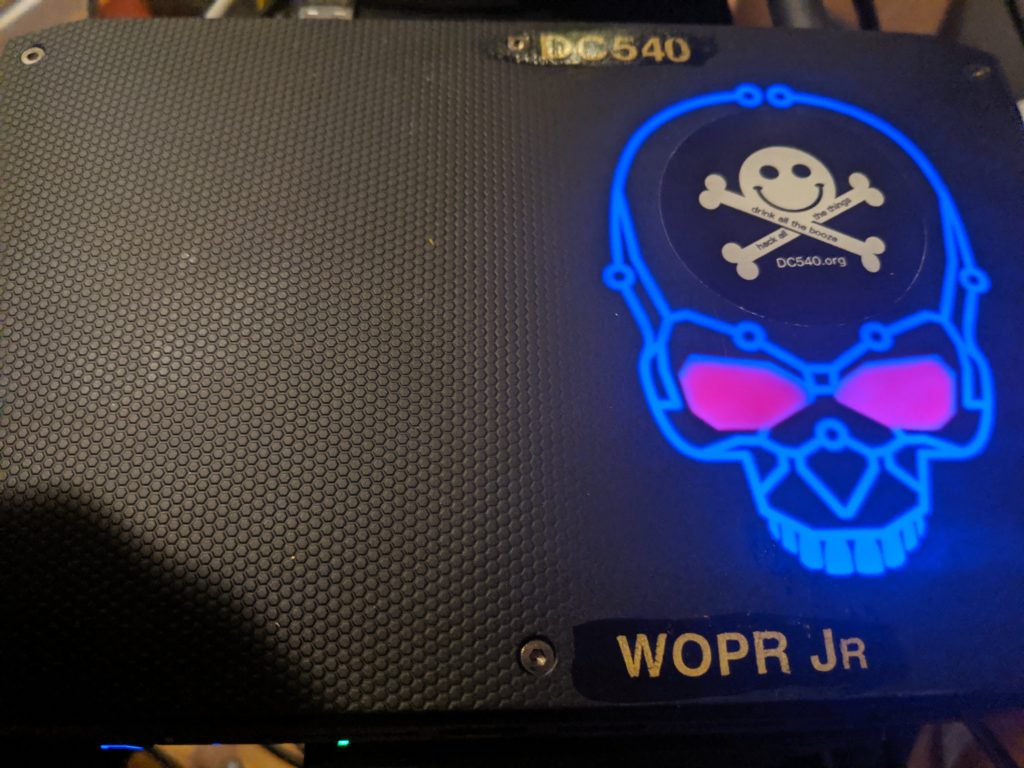

I bought what I ended up with in kit form. The 100W NUC8i7HVK barebones kit includes the case, the motherboard, an i7-8809G processor (4 cores, 8 threads, 3.1GHz base, boosting to 4.2GHz; 82% faster than the old i7-920 according to UserBenchmark), two Ethernet ports, and lots of options for video and USB. Max RAM for these is 32GB, which I ordered as Corsair Vengeance modules, so twice the RAM of the retired desktop. Lastly, the HC has two free M.2 slots (three, actually, but one is taken up by the wireless card). I populated the remaining two with a pair of Intel 660p NVMe SSDs in the 2TB version. I’m not even going to estimate the improvement of NVMe SSDs over platter drives.

I did a bit of research before choosing an operating system. I would have been perfectly comfortable with ESXi, like the old machine, but the HC has a really gorgeous color-controllable skull LED on the top cover that probably wouldn’t be controllable from a VM. I know that’s kind of a vain reason to choose a virtualization platform, but KVM performance these days, on the right hardware, is probably right there on par with ESXi, and KVM is arguably easier to write management scripts for. Combine that with the benefit of having a front-end OS for display, monitoring, LED management, and configuring external connections, and I think I came out on the right side.
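For anyone wondering whether their own box counts as "the right hardware" for KVM, here is a minimal Python sketch of one quick check: looking for the CPU's hardware virtualization flags (`vmx` for Intel VT-x, `svm` for AMD-V) in `/proc/cpuinfo`. The function name `virtualization_flags` is made up for illustration, and this is not something I ran as part of the build. A flag showing up only means the CPU supports it; the firmware can still have virtualization switched off.

```python
from pathlib import Path


def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags (vmx/svm) found in cpuinfo text.

    Hypothetical helper for illustration; it only parses text in the
    /proc/cpuinfo "flags : ..." format.
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # vmx = Intel VT-x, svm = AMD-V
            found.update(flag for flag in value.split() if flag in ("vmx", "svm"))
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    # On non-Linux systems the file does not exist; fall back to empty text.
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    flags = virtualization_flags(text)
    print("Virtualization flags found:", sorted(flags) or "none")
```

An empty result would suggest that KVM has to fall back to much slower software emulation on that machine.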

I ended up choosing Ubuntu for the base OS, because it seems like enough adventurous nerds have done the research on getting the most out of the NUC with it that it wouldn’t be a long pull to get it rolling, and I think I made the right choice there. I followed these instructions to get Ubuntu 18.04 LTS installed on the NUC and to make use of the Vega M integrated graphics. Along the way I also had to upgrade the NUC’s firmware and add nomodeset to the boot options, both during the install and permanently afterward. I read elsewhere that Ubuntu 18.10 would install with much less effort, but I really wanted an LTS release for this project.
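For the "permanently" part, the usual place on Ubuntu is the `GRUB_CMDLINE_LINUX_DEFAULT` line in `/etc/default/grub`, followed by `sudo update-grub`. Below is a rough Python sketch of that edit, assuming a stock GRUB setup; the helper name `add_kernel_param` is hypothetical, and it only transforms text, so nothing on disk is touched until you write the result back yourself.

```python
import re


def add_kernel_param(grub_text, param="nomodeset"):
    """Return grub_text with param present in GRUB_CMDLINE_LINUX_DEFAULT.

    Hypothetical helper for illustration. It leaves commented-out lines
    alone and does nothing if the parameter is already there.
    """
    pattern = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)

    def _append(match):
        current = match.group(2).split()
        if param not in current:
            current.append(param)
        return match.group(1) + " ".join(current) + match.group(3)

    new_text, count = pattern.subn(_append, grub_text)
    if count == 0:
        # No active line found: add one rather than guessing at other settings.
        new_text = grub_text.rstrip("\n") + f'\nGRUB_CMDLINE_LINUX_DEFAULT="{param}"\n'
    return new_text


if __name__ == "__main__":
    sample = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet splash"\n'
    print(add_kernel_param(sample))
```

After writing the modified text back to `/etc/default/grub` (as root, and with a backup), running `sudo update-grub` regenerates the boot configuration so the option sticks across reboots.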

I purchased a tiny monitor and a folding keyboard to keep the whole setup portable and allow troubleshooting on the fly.

The install went well, and the libvirt/KVM install was completely uneventful. Stay tuned for Part 2, where things start to come together, take shape, and get really exciting…